A group of senior experts, including two pioneers of the field, has warned that powerful artificial intelligence systems pose a threat to society. They argue that AI companies must be held accountable for harms caused by their products.

The statement, issued on Tuesday, comes as global leaders, technology companies, academics and civil society figures prepare to gather at Bletchley Park next week for a summit on AI safety.

One of the 23 experts who co-authored the policy proposals said it was extremely irresponsible to keep developing ever more powerful AI systems without first ensuring they are safe.

Stuart Russell, a professor of computer science at the University of California, Berkeley, said advanced AI systems must be taken seriously rather than treated as toys, and that enhancing their capabilities before making sure they are safe is reckless.

He added that sandwich shops are more tightly regulated than AI companies.

The document urged governments to adopt a range of policies, including:

- Dedicating one-third of government AI research and development funding, and one-third of companies' AI R&D resources, to the safe and ethical use of AI systems.
- Giving independent auditors access to AI laboratories.
- Introducing a licensing system for building advanced models.
- Requiring AI companies to adopt specific safety measures if dangerous capabilities are found in their models.
- Making tech companies liable for avoidable harm caused by their AI systems.

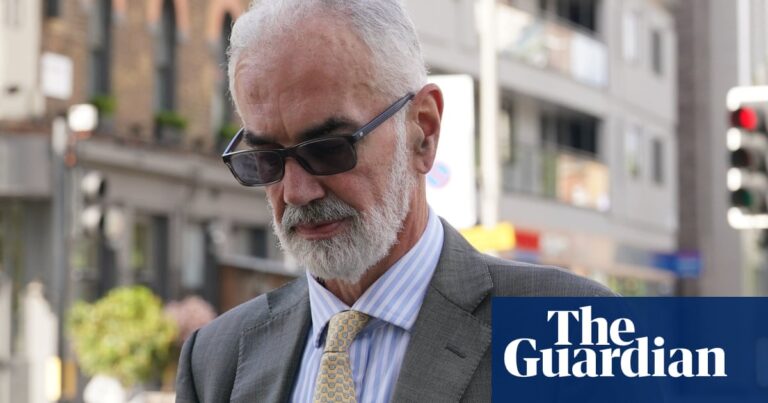

The paper's co-authors also include Geoffrey Hinton and Yoshua Bengio, two renowned AI pioneers who shared the 2018 ACM Turing award, often described as the highest honor in computer science, for their contributions to AI research.

Hinton and Bengio are among the 100 guests invited to the summit. Hinton left his position at Google this year to speak out about what he calls the “existential risk” posed by digital intelligence. Bengio, a professor of computer science at the University of Montreal, joined him and thousands of other experts in March in signing a letter that called for a pause on large-scale AI experiments.

Other co-authors of the proposals include Yuval Noah Harari, author of the bestseller Sapiens; Daniel Kahneman, a Nobel laureate in economics; Sheila McIlraith, a professor of AI at the University of Toronto; and the acclaimed Chinese computer scientist Andy Yao.


The authors warned that poorly designed AI systems could amplify social injustice, undermine professions, erode social stability, enable large-scale criminal or terrorist activity, and weaken the shared understanding of reality on which society depends.

They also warned that current AI systems already show worrying capabilities that point toward autonomous systems able to plan, pursue goals and act in the real world. GPT-4, the AI model developed by OpenAI that powers the ChatGPT tool, has demonstrated the ability to carry out chemistry experiments, browse the web, and use software tools, including other AI models.

Building highly advanced autonomous AI risks creating systems that pursue undesirable goals on their own, and that humans may be unable to keep under control.

Other recommendations in the document include mandatory reporting of incidents in which models show alarming behavior, measures to stop dangerous models from replicating themselves, and giving regulators the power to pause the development of AI models that behave dangerously.

The safety summit will focus on the dangers posed by AI, including its possible role in helping to create biological weapons and in evading human oversight. The UK government is working with other attendees on a statement that will highlight the scale of the risk posed by cutting-edge AI systems. Although the summit will discuss these risks and ways to address them, it is not expected to formally establish a global regulatory body.

Some AI experts believe that concerns about AI threatening humanity are exaggerated. Yann LeCun, who shared the 2018 Turing award with Bengio and Hinton and is chief AI scientist at Mark Zuckerberg’s Meta, is also attending the summit and told the Financial Times that the idea of AI destroying humans is absurd.

However, the authors of the policy paper said that if highly advanced autonomous AI systems emerged now, the world would lack the knowledge and capability to make them safe or to carry out safety evaluations on them. They added: “Even if we were able to do so, many nations lack the necessary organizations to prevent abuse and enforce proper protocols.”

Source: theguardian.com